I can’t believe I’ve never found this page on MSDN before (and not for lack of searching). It’s a mapping between SSIS data types and underlying types in common providers like SQL and Oracle:

Tuesday, December 15, 2009

Thursday, December 10, 2009

SharePoint 2010 Beta 2 EULA: No Go-Live Licence

There’s been a bit of confusion at work over whether the current SharePoint 2010 Public Beta (beta 2) does or doesn’t have a ‘go-live’ licence attached to it. In case others are wondering the same thing, here are the relevant sections copied straight out of the EULA when I installed it:

MICROSOFT PRE-RELEASE SOFTWARE LICENSE TERMS

MICROSOFT VERSION 2010 SERVER SOFTWARE…

1. INSTALLATION AND USE RIGHTS.

• You may install and test any number of copies of the software on your premises.

• You may not test the software in a live operating environment unless Microsoft permits you to do so under another agreement.

…

3. TERM. The term of this agreement is until 31/10/2010, or commercial release of the software, whichever is first.

…

8. SUPPORT SERVICES. Because this software is “as is,” we may not provide support services for it.

So… no. Well maybe. There’s no go-live that comes with it, but the option to go-live if separately approved is explicitly left open, and I have been told that’s exactly what some early adopters are doing. What you have to do to get said approval is unknown to me: there was a Technology Adopter Program (TAP), which is where Beta 1 went, so they’d obviously be candidates, or maybe it’s just a standard wording.

Also any number of people have blogged complaining about the lack of a migration path from the public beta to RTM. The only public statements to this effect I can find are on the SharePoint Team Blog:

Is the SharePoint public beta supported?

The SharePoint public beta is not supported. However, we recommend looking at our resources listed above and asking questions in the SharePoint 2010 forums.

Will there be a migration path from SharePoint public beta to final release?

We do not plan to support a SharePoint 2010 public beta to release bits migration path. The SharePoint 2010 public beta should be used for evaluation and feedback purposes only.

…and in the MSDN forums (see Jei Li’s reply):

Upgrade from SharePoint Server Public Beta to RTM (in-place, or database attach) for general public will be blocked. For exceptions, we will support those who hold a "Go Live" license, they were clearly communicated, signed a contract, and should know their upgrade process would be supported by CSS.

Which again suggests the possibility of TAP program members being supported into production with (predictably) a different level of support than a regular beta user.

Monday, November 30, 2009

Bugs, Betas and Go-Live Licences

.Net 4 and Visual Studio 2010 are currently available in Beta 2, with a Go-Live licence. So now’s a great time to download them, play with the new features, and raise Connect issues about the bugs you find, right?

Wrong.

These products are done. Baked. Finished[1]. It’s sad, but true, that generally by the time you start experimenting with a beta it’s already too late to get the bugs fixed. Raise all the Connect issues you want: your pet fix may make it into 2013 if you are lucky. Eric Lippert put it pretty well recently:

FYI, C# 4 is DONE. We are only making a few last-minute "user is electrocuted"-grade bug fixes, mostly based on your excellent feedback from the betas. (If you have bug reports from the beta, please keep sending them, but odds are good they won't get fixed for the initial release.)

Sadly, if you are playing with the betas, you are better off making mental notes of how to avoid what problems you do find, or praying any serious ones already got fixed / are getting fixed right now (eg: performance, help, blurry text, etc…)

[1] I’m exaggerating to make a point here, and please don’t think I have an inside track on this stuff, because I don’t.

Saturday, November 14, 2009

What’s New in Windows Forms for the .Net Framework 4.0

Um. Well…

Nothing.

At least as far as one can tell from the documentation anyway. Check out the following:

What’s New in the .Net Framework Version 4 (Client)

…and compare with previous versions: [Search:] What’s New in Windows Forms

Ok, so I should really do a Reflector-compare on the assemblies and see if there really are absolutely no changes. But the fact there’s not one trumpeted new feature speaks volumes about where Microsoft see the future of the client GUI, and they seem prepared to put that message across fairly bluntly. So much for whatever-they-said previously about ‘complementary technologies’ (or something?)

(Albeit, all this is beta 2 doco, subject to change blah blah)

Monday, November 09, 2009

Performance Point 2010

Looks like the details are starting to come out now, and (predictably) it looks like the lion’s share of the effort has been the full SharePoint integration, and not really any major new features (or even old ProClarity features re-introduced) bar a basic decomposition tree.

That’s probably quite a negative assessment: there are lots of tweaks and improvements. In particular I was excited by PP now honouring Analysis Services Conditional Formatting, though I try not to wonder why it wasn’t there before. What I’ve not seen anywhere is if you’ve now got any control over individual series colours in charts. Due to PerformancePoint’s dynamic nature this is a tricky request, but its absence was a show-stopper for us last time I used it. I guess one day I will just have to sit down with a beta and find out.

Personally I’m not sold on the SharePoint integration strategy, but from where PerformancePoint was (totally dependent on SharePoint but not well integrated) it makes a lot of sense. But you can’t help thinking Microsoft have burnt a whole product cycle just getting the fundamentals right. “This version: like the last should have been” is the all-too-familiar bottom line.

Friday, November 06, 2009

Report a Bug

Out of interest this morning I clicked on the ‘Report a Bug’ button in MSDN Library (offline version), to see what it did:

It goes to a Connect page that generates a bug for you so you’ve got something to report. That’s pretty damn clever, I thought.

Thursday, November 05, 2009

WM_TOUCH

So finally some time last week HP shipped to web the final 64bit NTrig drivers for my TX2, and I now have a working Windows 7 Multitouch device. Sure, candidate drivers have been available from the NTrig site for ages, but I had issues with ghost touches, so they got uninstalled within a day or so as the page I was browsing kept scrolling off…

Something still bugs me – which is that if the final drivers only went to web last week, how come they’re already selling the TX2 with Windows 7 in Harvey Norman? The 32 bit driver’s still not there for download today (1/11/09). For HP to be putting drivers on shipping PCs prior to releasing them to existing owners seems bizarre, if not downright insulting.

But I digress. Check this out:

Ok, it’s not my best work. But the litmus test of working multitouch is, amazingly, Windows Paint. If you can do this with two fingers, you are off and running (fingers not shown in screenshot – sorry).

So finally I can play touch properly, Win 7 style. And it rocks.

I am, for example, loving the inertia-compliant scrolling support in IE, which totally refutes my long-held belief that a physical scroll wheel is required on tablets, and makes browsing through long documents a joy. It alone would justify the Win 7 upgrade cost for a touch-tablet user. It’s not all plain sailing: I’m sure Google Earth used to respond to ‘pinch zoom’ under the NTrig Vista drivers (which handled the gestures themselves, so presumably sent the app a ‘zoom’ message like what your keyboard zoom slider would produce), but doesn’t in Win 7, at least until they implement the proper handling. But in most cases ‘things just work’ thanks to default handling of unhandled touch-related windows messages (eg unhandled touch-pan gesture messages will cause a scroll message to get posted, which is more likely to be supported).

But how to play with this yourself, using .Net?

In terms of hardware we are in early adopter land big time. The HP TX2 is half the price of the Dell XT2, and there’s that HP desktop too. Much more interesting is Wacom entering the fray with multi-touch on their new range of Bamboo tablets, including the Bamboo Fun. This is huge: at that price point ($200 USD) Wacom could easily account for the single largest touch demographic for the next few years, and ‘touching something’ has some distinct advantages over ‘touching the screen’: you can keep your huge fancy monitor, use touch at a bigger distance and avoid putting big smudges on whatever you’re looking at. (If anyone made a cheap input tablet that was also a low-rez display/SideShow device you’d get the best of both worlds of course). Finally, there is at least one project in beta to use two mice instead of a multitouch input device, which is (I believe) something that the Surface SDK already provides.

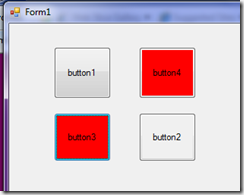

SDK-wise, unfortunately the Windows API Code Pack doesn’t help here, so we are off into Win32-land. And whilst there are some good explanatory articles around, they’re mostly C++, some are outdated from early Win 7 builds, and some are just plain incorrect (wrong interop signature in one case, which – from experience – is a real nasty to get caught by, since it might not crash in Debug builds). The best bet seems to be the fairly decent MSDN documentation, and particularly the samples in the Windows 7 SDK, which – if nothing else – is a good place to copy the interop signatures / structures from (since they’re not up on the P/Invoke Wiki yet). But just to get something very basic up and running doesn’t take that long:

Again, without the fingers it’s a bit underwhelming, but what’s going on here is that those buttons light up when there’s a touch input detected over them, and I have two fingers on the screen. Here’s the guts:

    protected override void WndProc(ref Message m)
    {
        foreach (var touch in _lastTouch)
        {
            if (DateTime.Now - touch.Value > TimeSpan.FromMilliseconds(500))
                touch.Key.BackColor = DefaultBackColor;
        }
        var handled = false;
        switch (m.Msg)
        {
            case NativeMethods.WM_TOUCH:
                {
                    var inputCount = m.WParam.ToInt32() & 0xffff;
                    var inputs = new NativeMethods.TOUCHINPUT[inputCount];
                    if (!NativeMethods.GetTouchInputInfo(m.LParam, inputCount, inputs, NativeMethods.TouchInputSize))
                    {
                        handled = false;
                    }
                    else
                    {
                        foreach (var input in inputs)
                        {
                            //Trace.WriteLine(string.Format("{0},{1} ({2},{3})", input.x, input.y, input.cxContact, input.cyContact));
                            var correctedPoint = this.PointToClient(new Point(input.x/100, input.y/100));
                            var control = GetChildAtPoint(correctedPoint);
                            if (control != null)
                            {
                                control.BackColor = Color.Red;
                                _lastTouch[control] = DateTime.Now;
                            }
                        }
                        handled = true;
                    }
                    richTextBox1.Text = inputCount.ToString();
                }
                break;
        }
        base.WndProc(ref m);
        if (handled)
        {
            // Acknowledge event if handled.
            m.Result = new System.IntPtr(1);
        }
    }
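For reference, here is roughly what the NativeMethods class used above could look like. Treat it as a sketch rather than the code behind the screenshot: the member names (WM_TOUCH, TOUCHINPUT, GetTouchInputInfo, TouchInputSize) are lifted from the snippet, the layouts follow the Win32 definitions as I understand them, and RegisterTouchWindow / CloseTouchInputHandle are included on the assumption that you'll need them (a window doesn't receive WM_TOUCH until it has been registered for touch).

```csharp
using System;
using System.Runtime.InteropServices;

// Sketch of the interop declarations the WndProc above relies on.
// Member names mirror the snippet; layouts follow the Win32 TOUCHINPUT definition.
public static class NativeMethods
{
    public const int WM_TOUCH = 0x0240;

    // x and y are screen coordinates in hundredths of a pixel,
    // which is why the WndProc divides them by 100.
    [StructLayout(LayoutKind.Sequential)]
    public struct TOUCHINPUT
    {
        public int x;
        public int y;
        public IntPtr hSource;
        public int dwID;
        public int dwFlags;
        public int dwMask;
        public int dwTime;
        public IntPtr dwExtraInfo;   // ULONG_PTR, so pointer-sized
        public int cxContact;
        public int cyContact;
    }

    // Size passed as cbSize to GetTouchInputInfo.
    public static readonly int TouchInputSize = Marshal.SizeOf(typeof(TOUCHINPUT));

    [DllImport("user32.dll", SetLastError = true)]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool RegisterTouchWindow(IntPtr hWnd, uint ulFlags);

    [DllImport("user32.dll", SetLastError = true)]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool GetTouchInputInfo(
        IntPtr hTouchInput, int cInputs, [In, Out] TOUCHINPUT[] pInputs, int cbSize);

    [DllImport("user32.dll", SetLastError = true)]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool CloseTouchInputHandle(IntPtr hTouchInput);
}
```

With something like this in place, the form would call NativeMethods.RegisterTouchWindow(Handle, 0) once its handle exists (OnHandleCreated is one plausible spot). The pointer-sized fields are the ones most likely to bite on 64 bit, so it's worth checking the struct size before trusting it.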

Now I’ll have to have a look at inertia, and WPF. I hear there’s native support for touch in WPF 4, so there’s presumably some managed-API goodness in .Net 4 for all this too, not just through the WPF layer. Which is just as well, because getting it working under WPF 3.5 looks a bit hairy (no WndProc to override, which is a bad start…)

Tuesday, November 03, 2009

Monday, October 12, 2009

Taming the APM pattern with AsyncAction/AsyncFunc

Ok, so in the previous post I talked about how nesting asynchronous operations using the APM pattern quickly turns into a world of pain if the operation is implemented on a class that’s new’d up per call in your method. But I’ll recap for the sake of clarity.

Easy Case

Implementation of the async operation is ‘on’ your instance, either directly or through composition:

    public IAsyncResult BeginDoSomething(int input, AsyncCallback callback, object state)
    {
        return _command.BeginInvoke(input, callback, state);
    }
    public string EndDoSomething(IAsyncResult result)
    {
        return _command.EndInvoke(result);
    }

And you are done! Nothing more to see here. Keep moving.

Hard Case

Implementation of the async operation is on something you ‘new-up’ for the operation, like a SQL command or somesuch:

    public IAsyncResult BeginDoSomething(int input, AsyncCallback callback, object state)
    {
        var command = new SomeCommand();
        return command.BeginDoSomethingInternal(input, callback, state);
    }
    public string EndDoSomething(IAsyncResult result)
    {
        // we are screwed, since we don't have a reference to 'command' any more
        throw new HorribleException("Argh");
    }

Note the comment in the EndDoSomething method. Also note that most of the ‘easy’ ways to get around this either break the caller, fail if callback / state are passed as null / are non-unique, introduce race conditions or don’t properly support all of the ways you can complete the async operation (see previous post for more details).

Pyrrhic Fix

I got it working using an AsyncResultWrapper class and a bunch of state-hiding-in-closures. But man it looks like hard work:

    public IAsyncResult BeginDoSomething(int value, AsyncCallback callback, object state)
    {
        var command = new SomeCommand();
        AsyncCallback wrappedCallback = null;
        if (callback != null)
            wrappedCallback = delegate(IAsyncResult result1)
            {
                var wrappedResult1 = new AsyncResultWrapper(result1, state);
                callback(wrappedResult1);
            };
        var result = command.BeginDoSomethingInternal(value, wrappedCallback, command);
        return new AsyncResultWrapper(result, state);
    }
    public string EndDoSomething(IAsyncResult result)
    {
        var state = (AsyncResultWrapper)result;
        var command = (SomeCommand)state.InnerResult.AsyncState;
        return command.EndDoSomethingInternal(state.InnerResult);
    }

Just thinking about cut-and-pasting that into all my proxy classes makes my head hurt. There must be a better way. But there wasn’t. So…

AsyncAction<T> / AsyncFunc<T,TRet>

What I wanted was some way to wrap all this up, and provide an ‘easy’ API to implement this (‘cuz we got lots of them to do). Here’s what the caller now sees:

    public IAsyncResult BeginDoSomething(int input, AsyncCallback callback, object state)
    {
        var command = new SomeCommand();
        var asyncFunc = new AsyncFunc<int, string>(
            command.BeginDoSomethingInternal,
            command.EndDoSomethingInternal,
            callback, state);
        return asyncFunc.Begin(input);
    }
    public string EndDoSomething(IAsyncResult result)
    {
        var asyncFuncState = (IAsyncFuncState<string>)result;
        return asyncFuncState.End();
    }

I think that’s a pretty major improvement personally. For brevity I won’t post all the implementation code here, but from the AsyncResultWrapper implementation above and the signature you can pretty much bang it together in a few mins. It’s surprisingly easy when you know what you are aiming for.
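For anyone who'd rather not bang it together from scratch, here's one way it might look. To be clear, this is a guess reconstructed from the calling code, not the real implementation: the constructor shape and IAsyncFuncState<TReturn>.End() come from the snippet above, while the private State class, the field names and the decision to have Begin take the input argument are all assumptions.

```csharp
using System;
using System.Threading;

// Hypothetical reconstruction: every member here is inferred from the calling code.
public interface IAsyncFuncState<TReturn> : IAsyncResult
{
    TReturn End();
}

public sealed class AsyncFunc<TInput, TReturn>
{
    private readonly Func<TInput, AsyncCallback, object, IAsyncResult> _begin;
    private readonly Func<IAsyncResult, TReturn> _end;
    private readonly AsyncCallback _callback;
    private readonly object _state;

    public AsyncFunc(
        Func<TInput, AsyncCallback, object, IAsyncResult> begin,
        Func<IAsyncResult, TReturn> end,
        AsyncCallback callback,
        object state)
    {
        if (begin == null) throw new ArgumentNullException("begin");
        if (end == null) throw new ArgumentNullException("end");
        _begin = begin;
        _end = end;
        _callback = callback;
        _state = state;
    }

    public IAsyncResult Begin(TInput input)
    {
        // Wrap the caller's callback so it is handed the wrapper (carrying
        // the caller's state) rather than the raw inner IAsyncResult.
        AsyncCallback wrapped = null;
        if (_callback != null)
            wrapped = inner => _callback(new State(inner, _end, _state));

        // The inner operation needs no state of its own: the end delegate
        // already captures the instance it has to run against.
        IAsyncResult result = _begin(input, wrapped, null);
        return new State(result, _end, _state);
    }

    private sealed class State : IAsyncFuncState<TReturn>
    {
        private readonly IAsyncResult _inner;
        private readonly Func<IAsyncResult, TReturn> _end;
        private readonly object _callerState;

        public State(IAsyncResult inner, Func<IAsyncResult, TReturn> end, object callerState)
        {
            _inner = inner;
            _end = end;
            _callerState = callerState;
        }

        // Completes the inner operation using the inner (unwrapped) result.
        public TReturn End() { return _end(_inner); }

        // The caller sees their own state; everything else is delegated.
        public object AsyncState { get { return _callerState; } }
        public WaitHandle AsyncWaitHandle { get { return _inner.AsyncWaitHandle; } }
        public bool CompletedSynchronously { get { return _inner.CompletedSynchronously; } }
        public bool IsCompleted { get { return _inner.IsCompleted; } }
    }
}
```

As in the hand-rolled version, the callback and the return value each get their own wrapper around the same inner result. That avoids any shared mutable state if the callback fires before Begin has returned.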

Curried Lama

Now actually in my usage I needed something a bit more flash, where the instance of the object that the Begin method was to execute on would be determined ‘late on’, rather than frozen into the constructor. So I ended up with something looking a bit more like this:

    var asyncFunc = new AsyncFunc<SomeCommand, int, string>(
        c => c.BeginDoSomethingInternal,
        c => c.EndDoSomethingInternal,
        callback, state);
    // Later on...
    var command = new SomeCommand();
    return asyncFunc.Begin(command);

(The idea is that a series of these can get stored in a chain, and executed one-by-one, with ‘command’ actually replaced by a state machine – i.e. each operation gets to execute against the current state object in the state machine at the time that operation executes)

This results in some crazy signatures in the actual AsyncFunc class, the kind that keep Mitch awake at night muttering about the decay of modern computer science:

    public AsyncFunc(
        Func<TInstance, Func<TInput, AsyncCallback, object, IAsyncResult>> beginInvoke,
        Func<TInstance, Func<IAsyncResult, TReturn>> endInvoke,
        AsyncCallback callback,
        object state
        )

…but it was that or:

    var asyncFunc = new AsyncFunc<SomeCommand, int, string>(
        (c,a,cb,s) => c.BeginDoSomethingInternal(a,cb,s),
        (c,ar) => c.EndDoSomethingInternal(ar),
        callback, state);

…which is just more fiddly typing for the user, not the implementer. And it made for some funky currying for overloaded versions of the ctor where you wanted to pass in a ‘flat’ lambda:

    _beginInvoke = (i) => (a,c,s) => beginInvoke(i,a,c,s);

Best keep quiet about that I think.

Since this is the async equivalent of Func<TArg,TRet>, you are probably wondering about async version of Action<TArg> and yes there is one of those. And of course, if you want more than one argument (Action<TArg1,TArg2>) you will need yet another implementation. That really sucks, but I can’t see us getting generic-generics any time soon.

I was talking to Joe Albahari about this at TechEd and he suggested that I was attempting to implement Continuations, which wasn’t how I’d been thinking about it but is about right. But I’d need to post about calling a series of these using AsyncActionQueue<TInstance, TArg> to really explain that one.

PS: As it turns out, this looks a fair bit like some of the TaskFactory.FromAsync factory methods in .Net 4:

…but I didn’t know that at the time. Yeah, you can chain those too. Grr. It’s just yet another example of why .Net 4 should have come out a year ago so I could be using it on my current project. And don’t even mention covariance to me.
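For comparison, here's a minimal sketch of what the .Net 4 version of ‘wrap a Begin/End pair’ looks like. I've used a Stream as a stand-in for the new'd-up command, and the FromAsyncSketch / ReadAsync names are made up for the example. The FromAsync overload itself is the one on TaskFactory<TResult> that takes the begin method, the end method, three arguments and a state object.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class FromAsyncSketch
{
    // Wraps the stream's BeginRead/EndRead pair in a Task<int>.
    // The Task holds on to the instance the End method must be called on,
    // which is the bookkeeping AsyncFunc has to do by hand.
    public static Task<int> ReadAsync(Stream stream, byte[] buffer)
    {
        return Task<int>.Factory.FromAsync(
            stream.BeginRead, stream.EndRead,
            buffer, 0, buffer.Length,
            null);   // state object, unused here
    }
}
```

The returned Task<int> can then be chained with ContinueWith, which covers much the same ground as the chain of AsyncFuncs described above.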

PPS: Have I totally lost it this time?

Monday, September 28, 2009

Implementing Asynchronous Operations in WCF

Just as the WCF plumbing enables clients to call server operations asynchronously, without the server needing to know anything about it, WCF also allows service operations to be defined asynchronously. So an operation like:

    [OperationContract]
    string DoWork(int value);

…might instead be expressed in the service contract as:

    [OperationContract(AsyncPattern = true)]
    IAsyncResult BeginDoWork(int value, AsyncCallback callback, object state);
    string EndDoWork(IAsyncResult result);

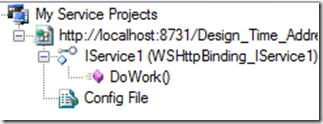

Note that the two forms are equivalent, and indistinguishable in the WCF metadata: they both expose an operation called DoWork[1]:

... but in the async version you are allowing the original WCF dispatcher thread to be returned to the pool (and hence service further requests) while you carry on processing in the background. When your operation completes, you call the WCF-supplied callback, which then completes the request by sending the response to the client down the (still open) channel. You have decoupled the request lifetime from that of the original WCF dispatcher thread.

From a scalability perspective this is huge, because you can minimise the time WCF threads are tied up waiting for your operation to complete. Threads are an expensive system resource, and not something you want lying around blocked when they could be doing other useful work.

Note this only makes any sense if the operation you’re implementing really is asynchronous in nature, otherwise the additional expense of all this thread-switching outweighs the benefits. Wenlong Dong, one of the WCF team, has written a good blog article about this, but basically it all comes down to I/O operations, which are the ones that already have Begin/End overloads implemented.

Since Windows NT, most I/O operations (file handles, sockets etc…) have been implemented using I/O Completion Ports (IOCP). This is a complex subject, and not one I will pretend to really understand, but basically Windows already handles I/O operations asynchronously, as IOCPs are essentially work queues. Up in .Net land, if you are calling an I/O operation synchronously, someone, somewhere, is waiting on a waithandle for the IOCP to complete before freeing up your thread. By using the Begin/End methods provided in classes like System.IO.FileStream, System.Net.Sockets.Socket and System.Data.SqlClient.SqlCommand, you start to take advantage of the asynchronicity that’s already plumbed into the Windows kernel, and you can avoid blocking that thread.

So how to get started?

Here’s the simple case: a WCF service with an async operation, the implementation of which calls an async method on an instance field which performs the actual IO operation. We’ll use the same BeginDoWork / EndDoWork signatures we described above:

    public class SimpleAsyncService : IMyAsyncService
    {
        readonly SomeIoClass _innerIoProvider = new SomeIoClass();
        public IAsyncResult BeginDoWork(int value, AsyncCallback callback, object state)
        {
            return _innerIoProvider.BeginDoSomething(value, callback, state);
        }
        public string EndDoWork(IAsyncResult result)
        {
            return _innerIoProvider.EndDoSomething(result);
        }
    }

I said this was simple, yes? All the service has to do to implement the Begin/End methods is delegate to the composed object that performs the underlying async operation.

This works because the only state the service has to worry about – the ‘SomeIoClass’ instance – is already stored on one of its instance fields. It’s the caller’s responsibility (i.e. WCF’s) to call the End method on the same instance that the Begin method was called on, so all our state management is taken care of for us.

Unfortunately it’s not always that simple.

Say the IO operation is something you want to / have to new-up each time it’s invoked, like a SqlCommand that uses one of the parameters (or somesuch). If you just modify the code to create the instance:

    public IAsyncResult BeginDoWork(int value, AsyncCallback callback, object state)
    {
        var ioProvider = new SomeIoClass();
        return ioProvider.BeginDoSomething(value, callback, state);
    }

…you are instantly in a world of pain. How are you going to implement your EndDoWork method, since you just lost the reference to the SomeIoClass instance you called BeginDoSomething on?

What you really want to do here is something like this:

    public IAsyncResult BeginDoWork(int value, AsyncCallback callback, object state)
    {
        var ioProvider = new SomeIoClass();
        return ioProvider.BeginDoSomething(value, callback, ioProvider); // !
    }
    public string EndDoWork(IAsyncResult result)
    {
        var ioProvider = (SomeIoClass) result.AsyncState;
        return ioProvider.EndDoSomething(result);
    }

We’ve passed the ‘SomeIoClass’ as the state parameter on the inner async operation, so it’s available to us in the EndDoWork method by casting from the AsyncResult.AsyncState property. But now we’ve lost the caller’s state, and worse, the IAsyncResult we return to the caller has our state not their state, so they’ll probably blow up. I know I did.

One possible fix is to maintain a state lookup dictionary, but what to key it on? Both the callback and the state may be null, and even if they’re not, there’s nothing to say they have to be unique. You could use the IAsyncResult itself, on the basis that almost certainly is unique, but then there’s a race condition: you don’t get that until after the Begin call returns, by which time the callback might have fired. So your End method may get called before you can store the state into your dictionary. Messy.

Really what you’re supposed to do is implement your own IAsyncResult, since this is the only thing that’s guaranteed to be passed from Begin to End. Since we’re not actually creating the real asynchronous operation here (the underlying I/O operation is), we don’t control the waithandle, so the simplest approach seemed to be to wrap the IAsyncResult in such a way that the original caller still sees their expected state, whilst still storing our own.

I’m not sure if there’s another way of doing this, but this is the only arrangement I could make work:

    public IAsyncResult BeginDoWork(int value, AsyncCallback callback, object state)
    {
        var ioProvider = new SomeIoClass();
        AsyncCallback wrappedCallback = null;
        if (callback!=null)
            wrappedCallback = delegate(IAsyncResult result1)
            {
                var wrappedResult1 = new AsyncResultWrapper(result1, state);
                callback(wrappedResult1);
            };
        var result = ioProvider.BeginDoSomething(value, wrappedCallback, ioProvider);
        return new AsyncResultWrapper(result, state);
    }

Fsk!

Note that we have to wrap the callback, as well as the return. Otherwise the callback (if present) is called with a non-wrapped IAsyncResult. If you passed the client’s state as the state parameter, then you can’t get your state in EndDoWork(), and if you passed your state then the client’s callback will explode. As will your head trying to follow all this.

Fortunately the EndDoWork() just looks like this:

    public string EndDoWork(IAsyncResult result)
    {
        var state = (AsyncResultWrapper) result;
        var ioProvider = (SomeIoClass)state.PrivateState;
        // Hand the inner result (not our wrapper) back to the operation that created it
        return ioProvider.EndDoSomething(state.InnerResult);
    }

And for the sake of completeness, here’s the AsyncResultWrapper:

    private class AsyncResultWrapper : IAsyncResult
    {
        private readonly IAsyncResult _result;
        private readonly object _publicState;
        public AsyncResultWrapper(IAsyncResult result, object publicState)
        {
            _result = result;
            _publicState = publicState;
        }
        // What the original caller sees: their own state
        public object AsyncState
        {
            get { return _publicState; }
        }
        // Our state, stashed on the inner operation
        public object PrivateState
        {
            get { return _result.AsyncState; }
        }
        // The unwrapped result, needed when calling the inner End method
        public IAsyncResult InnerResult
        {
            get { return _result; }
        }
        // Elided: Delegated implementation of IAsyncResult using composed IAsyncResult
    }

By now you are thinking ‘what a mess’, and believe me I am right with you there. It took a bit of trial-and-error to get this working, it’s a major pain to have to implement this for each-and-every nested async call and – guess what – we have lots of them to do. I was pretty sure there must be a better way, but I looked and I couldn’t find one. So I decided to write one, which we’ll cover next time.

Take-home:

Operations that are intrinsically asynchronous – like IO - should be exposed as asynchronous operations, to improve the scalability of your service.

Until .Net 4 comes out, chaining and nesting async operations is a major pain in the arse if you need to maintain any per-call state.

Update: 30/9/2009 – Fixed some typos in the sample code

[1] Incidentally, if you implement both the sync and async forms for a given operation (and keep the Action name for both the same, or the default), it appears the sync version gets called preferentially.

Monday, September 21, 2009

Calling a WCF Service Asynchronously from the Client

In ‘old school’ ASMX web services, the generated proxy allowed you to call a service method asynchronously by using the auto-generated Begin/End methods for the operation in question. This is important for client applications, to avoid blocking the UI thread when doing something as (potentially) slow as a network call.

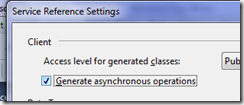

WCF continues this approach: if you use ‘add service reference’ with ‘Generate Asynchronous Operations’ checked (in the Advanced dialog):

... or SvcUtil.exe with the /a (async) option, your client proxy will contain Begin/End methods for each operation, in addition to the original, synchronous version. So for an operation like:

    [OperationContract]
    string DoWork(int value);

…the proxy-generated version of the interface will contain two additional methods, representing the Asynchronous Programming Model (APM)-equivalent signature:

    [OperationContract(AsyncPattern=true)]
    IAsyncResult BeginDoWork(int value, AsyncCallback callback, object asyncState);
    string EndDoWork(IAsyncResult result); // No [OperationContract] here

All it takes then to call the operation is to call the relevant Begin method, passing in a callback that is used to process the result. The UI thread is left unblocked and your request is executed in the background.

You can achieve the same result[1] with a ChannelFactory, but it takes a bit more work. Typically you use the ChannelFactory directly when you are sharing interface types, in which case you only get Begin/End methods if they are defined on the original interface – the ChannelFactory can’t magically add them. What you can do, however, is create another copy of the interface, and manually implement the additional ‘async pattern’ signatures, as demonstrated above.

Either way, what’s really important to realise here is that the transport is still synchronous. What the Begin/End methods are doing is allowing you to ‘hand off’ the message dispatch to WCF, and be called back when the result returns. And this explains why we can pull the trick above where we change the client’s version of the interface and it all ‘just still works’: from WCF’s perspective the sync and begin/end-pair signatures are considered identical. The service itself has only one operation ‘DoWork’.

And the reverse applies too. If you have a service operation already defined following the APM - i.e. BeginDoWork / EndDoWork - unless you choose to generate the async overloads your client proxy is going to only contain the sync version: DoWork(). Your client is calling – synchronously – an operation expressly defined asynchronously on the server. Weird, yes? But (as we will see later), it doesn’t matter. It makes no difference to the server.

Take-home

Clients should call service operations using async methods, to avoid blocking the UI thread. Whether the service is or isn’t implemented asynchronously is completely irrelevant[2].

[1] For completeness I should point out there are actually two more ways to call the operation asynchronously, but it all amounts to the same thing:

- The generated service proxy client also contains OperationAsync / OperationCompleted method/event pairs. This event-based approach is considered easier to use (I don’t see it personally). Note this is on the generated client, not on the proxy’s version of the interface, so you can’t do this with a ChannelFactory (unless you implement it yourself).

- If you’re deriving from ClientBase directly you can use the protected InvokeAsync method directly. Hardcore! This is how the generated proxy client implements the Async / Completed pattern above.

[2] …and transparent, unless you’re sharing interface types

Sunday, September 20, 2009

Tablet Netbooks

I said back in March that what I really wanted was a 10 hour tablet netbook. Well, they’re starting to come out now:

http://www.dynamism.com/viliv_s7.shtml

It’s actually too small, and not dual core (there must eventually be a netbook version of the Atom 300 yes?), but we’re so close now.

Friday, September 18, 2009

Asynchronous Programming With WCF

I’m currently spending a lot of time exposing intrinsically asynchronous server operations to a client application, and I’ve been really impressed with the way WCF caters for this. Asynchronicity is very important for performance and scalability of high-volume systems, but it’s a confusing area, and often something that’s poorly understood.

So I thought I’d write a series of posts to try and shed some light into these areas, as well as share some of the more interesting things I’ve learnt along the way.

Before we start you’ll have to have at least passing familiarity with the Asynchronous Programming Model (APM), which describes the general rules surrounding the expression of an operation as a pair of Begin/End methods. At very least, you need to get your head around the fact that these signatures:

    // sync version
    int DoWork();
    // async version as Begin/End pair
    IAsyncResult BeginDoWork(AsyncCallback callback, object state);
    int EndDoWork(IAsyncResult result);

…are equivalent.
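To make that equivalence a bit more concrete, here's a sketch from the caller's side. It uses Stream.Read and its BeginRead/EndRead pair as a stand-in for DoWork, since that's a real APM pair that ships in the framework. The ApmCallerSketch class and its method names are invented for the example. All three methods return the same answer; they differ only in what the calling thread does while the operation is in flight.

```csharp
using System;
using System.IO;
using System.Threading;

public static class ApmCallerSketch
{
    // Sync version: the calling thread is blocked for the whole operation.
    public static int ReadSync(Stream stream, byte[] buffer)
    {
        return stream.Read(buffer, 0, buffer.Length);
    }

    // Begin, then call End straight away: End blocks until the operation is done.
    public static int ReadViaEnd(Stream stream, byte[] buffer)
    {
        IAsyncResult asyncResult = stream.BeginRead(buffer, 0, buffer.Length, null, null);
        return stream.EndRead(asyncResult);
    }

    // Begin with a callback: End is called from the callback when the operation
    // completes. The wait here exists only so the method can return the value;
    // a real caller would carry on with other work instead.
    public static int ReadViaCallback(Stream stream, byte[] buffer)
    {
        int bytesRead = -1;
        using (var done = new ManualResetEvent(false))
        {
            stream.BeginRead(buffer, 0, buffer.Length, asyncResult =>
            {
                try { bytesRead = stream.EndRead(asyncResult); }
                finally { done.Set(); }
            }, null);
            done.WaitOne();
        }
        return bytesRead;
    }
}
```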

Thursday, September 17, 2009

Christmas Come Early for Certifications

At TechEd Australia Microsoft were giving out 25% discount vouchers for certification exams, as part of a ‘get-certified’ push.

From now until Christmas you can also apply for (depending on the exam) 25%-off vouchers via this campaign site:

http://www.microsoft.com/learning/en/us/offers/career.aspx

Doesn’t seem to be any one-per-person restriction either.

Friday, September 11, 2009

TechEdAu 2009: Day 3

Highlights:

- All the parallel tasks support in .Net 4

Lowlights:

- No go-live licence for the parallel tasks support in .Net 4, or the existing CTPs.

- The ‘real world’ EF talk, that blew

Update: Hey, somewhere in these last 3 posts I should have mentioned Windows Identity Foundation, which looked pretty cool (rationalizing all the claims-based identity stuff out of WCF). But I didn’t. So this’ll just have to do.

TechEdAu 2009: Day 2

Highlights:

- MSDeploy!

- BizTalk 2009 projects are now essentially .csproj: can build / deploy using MSBuild. Some unit testing.

- The AVICode .Net Management Pack for SCOM 2007. Quite simply awesome.

Somewhere-in-the-middle-lights:

- Windows API code pack: Use XP, Vista, Win7 features easily without interop, but you’re just going to cop a whole heap of if(win7) OS-sniffing around anything interesting. Didn’t we get really sick of all that browser sniffing stuff?

Lowlights:

- Diagnosing your misbehaving MDX is still too damn hard, even though Darren Gosbell’s talk was really good.

- The Claw

Wednesday, September 09, 2009

TechEdAu 2009: Day 1

Highlights:

- Windows 7 Problem Steps Recorder: built-in screen flow capture can be used to troubleshoot – or just document – your own UIs

- SketchFlow: Microsoft getting into the ‘paper-prototyping’ space

- Sql 2008 R2 ‘Database Application Components’ – packages schema, logins and jobs as one deployment unit, mainly intended for dynamic virtualisation, but obvious implications for ‘over the fence’ UAT/Prod deployments (good commentary). And CEP (‘StreamInsight’) for real-time in-memory data analysis.

- Gemini: heterogeneous data query and BI for the Excel guy. I think the business will go nuts, the question is how easy it’ll be to upscale it to SSAS when it becomes necessary. Some suggestion the Gemini code may be used to improve SSAS’s ROLAP performance.

- Dublin: making your WF / WCF apps manageable via IIS manager, and visible to SCOM. One click admin UI to resume suspended workflows

- Workflow: It’s back! And now looking more like SSIS than ever (variables as part of the pipeline etc..). XAML only all the way now, so no more CodeActivities, and no backwards compatibility :-/

- Using SetToString for MDX debugging. Genius.

- WCF for .Net 4: Default bindings! Standard endpoints! Default behaviour configurations!

- I should probably mention the free HP Mini netbook as well.

- Printed schedule. At last.

- Passing WCF exam

Lowlights:

- Schedule builder designed for larger screens than HP Mini. Can we just get the data from a feed please?

- Coffee queue

- No pen or paper in bag

- No outbound SMTP from the WiFi (again). IMAP users roll your eyes…

(Update 17/9: Added some hyperlinks in lieu of more detailed explanations. And realized I missed Sql CEP altogether…)

Monday, August 31, 2009

Active Directory Powershell Utils

$ds = new-object system.directoryservices.directorysearcher

function Find-Group($samName){

$ds.filter = ('(samaccountname={0})' -f $samName)

$ds.FindAll()

}

function Find-User($samName){

$ds.filter = ('(samaccountname={0})' -f $samName)

$ds.FindAll()

}

function Find-Members($groupName){

$group = Find-Group $groupName

$group.Properties.member | Get-Entry

}

function Get-Entry($cn){

begin{

if($cn){

new-object System.directoryservices.DirectoryEntry("LDAP://$cn")

}

}

process{

if($_){

new-object System.directoryservices.DirectoryEntry("LDAP://$_")

}

}

end{

}

}

(ok, three functions. Two are the same, I know)

Thursday, August 20, 2009

When to Automate (Revisited)

The classic example of this is a typical data migration effort. Months of work goes into creating a one-off artefact, primarily to ensure the system down-time is minimized. There are other advantages (testing, pre-transition sign-off, auditing), but in most cases these just exist to mitigate against the risk of downtime, so it's still all about the execution interval.

What I'd not really thought about until the other day was that this also applies to refactoring efforts on code. Any large refactoring burns a considerable amount of time just keeping up with the changes people check-in whilst you're still working away. Given a sizable enough refactoring, and enough code-churn, you get to a point beyond which you actually can't keep up, and end up trapped in merge integration hell.

There are two normal responses to this. The first is to keep the size down in the first place, and bite off smaller, more manageable chunks. This can involve some duplication of effort, which is why many team-leads will take the second option: come in in the evening or over the weekend for a big-bang macho refactorfest.

But there is a 'third way': the refactoring can be as big as you like, as long as you keep it brief. If you can take the latest version of the codebase, refactor it and check it in before anyone else commits any more changes then you only have to worry about the pained expressions on the face of your co-workers as the entire solution restructures in front of their eyes. But you'll get that anyway, right?

Right now I've got some 50 projects, spread over 4 solutions, most of which have inconsistent folder names, project names, assembly names and base namespaces, and some of which we just want to rename as the solution has matured.

To do this by hand would be an absolute swine of a job, and highly prone to checkin-integration churn (all those merges on .csproj / .sln files! the pain! the pain!). Fortunately however Powershell just eats this kind of stuff up. It's taken the best part of the day to get the script ready, but now that it is, the whole thing can be done and checked in in some 15 mins, solutions and source control included. And there's no point-of-no-return: at any point up till checkin all I've done is improve the script for next time, so no time has been wasted.

And by de-costing[1] the whole process, we can do it again some day...

[1] ok, I'm sorry for 'de-costing'. But I struggled to come up with a better term for 'reducing the overhead to next-to-nothing' that wasn't unnecessarily verbose, or had 'demean' connotations.

Monday, August 10, 2009

To Windows 7… and back again

This weekend I uninstalled the RTM of Windows 7.

Whilst I could have probably got the glide pad and sound card working with Vista drivers (had I spent enough time on the Dell website lying about what system I had to find the current versions of drivers, rather than just the aged XP ones listed under Inspiron 9300), what killed it was my Kaiser Baas Dual DVB USB tuner wouldn’t play ball: driver appeared all ok, device recognised etc… but it just wouldn’t detect any TV channels anymore. And for a media centre PC that’s just not on. And with a limited window before it’d miss recording Play School and earn me the scorn of my sons, I pulled the plug and dug out the Vista RTM disk (yeah: do you think I could successfully download the with-SP2 version from MSDN this weekend? Of course not).

This is the only time in living memory I’ve upgraded a PC without pulling the old HD as a fall-back, and predictably it’s the one that bit me in the arse…

Mind you, a fresh install’s made it feel pretty snappy again, Vista or no.

Wednesday, August 05, 2009

Ticks

(No, not the ones you get camping. This is a developer blog).

What’s a Tick? Depends who you ask. ‘Ticks’ are just how a given component chooses to measure time. For example,

DateTime.Now.Ticks = 100 ns ticks, i.e. 10,000,000 ticks/sec

Environment.TickCount = 1 ms ticks since system start

These are just fine for timing webservices, round-trips to the database and even measuring the overall performance of your system from a use-case perspective (UI click to results back). But for really accurate timings you need to use system ticks, i.e. ticks of the high-resolution timer: System.Diagnostics.Stopwatch.GetTimestamp() [1]. This timer’s frequency (and hence resolution) depends on your system. On mine:

System.Diagnostics.Stopwatch.Frequency = 3,325,040,000 ticks/sec

…which I make to be 0.3ns ticks. So some 300x more accurate than DateTime.Now, and accurate enough to start measuring fine-grained performance costs, like boxing/unboxing vs generic lists.
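If you want to check the arithmetic on your own box, something like the following would do it. This is just a rough sketch: TickMath and its method names are mine, not anything in the framework; only Stopwatch.Frequency, Stopwatch.IsHighResolution, Stopwatch.GetTimestamp() and TimeSpan.TicksPerSecond are real.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper, for illustration only
public static class TickMath
{
    // Nanoseconds represented by one tick of a timer running at the given frequency
    public static double NanosecondsPerTick(long ticksPerSecond)
    {
        return 1e9 / ticksPerSecond;
    }

    // Converts a pair of Stopwatch.GetTimestamp() readings into milliseconds
    public static double ElapsedMilliseconds(long startTimestamp, long endTimestamp)
    {
        return (endTimestamp - startTimestamp) * 1000.0 / Stopwatch.Frequency;
    }

    public static void Report()
    {
        // DateTime ticks are fixed at 10,000,000 per second
        Console.WriteLine("DateTime tick:  {0} ns", NanosecondsPerTick(TimeSpan.TicksPerSecond));

        // Stopwatch ticks depend on the machine
        Console.WriteLine("Stopwatch tick: {0:F3} ns (high resolution: {1})",
            NanosecondsPerTick(Stopwatch.Frequency), Stopwatch.IsHighResolution);

        long start = Stopwatch.GetTimestamp();
        // ... the code being timed goes here ...
        long end = Stopwatch.GetTimestamp();
        Console.WriteLine("Elapsed: {0} ms", ElapsedMilliseconds(start, end));
    }
}
```

Plugging in the frequency quoted above, 1e9 / 3,325,040,000 comes out at roughly 0.30 ns per tick, against 100 ns for a DateTime tick.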

Clearly it’s overkill to use that level of precision in many cases, but if you’re logging to system performance counters you must use those ‘kind of ticks’, because that’s what counter types like PerformanceCounterType.AverageTimer32 and PerformanceCounterType.CounterTimer require.

[1] Thankfully since .Net 2, we don’t have to use P/Invoke to access this functionality anymore. On one occasion I mistyped the interop signature (to return void), and created a web-app that ran fine as a debug build, but failed spectacularly when built for release. Took a while to track that one down...

Tuesday, August 04, 2009

GC.Collect: Whatever doesn’t kill you makes you stronger

Just a meme that was bouncing around the office this morning, in relation to the nasty side-effect of calling GC.Collect explicitly in code: promotion of everything that doesn’t get collected.

What this means of course is if you call GC.Collect way before you get any memory pressure, objects will get promoted to Gen 1, then to Gen 2, and quite likely not get cleaned up for a long, long time, if ever.

LLBLGen take note
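If you want to watch the promotion happen, a quick sketch like this should show it. PromotionDemo is a made-up name; GC.GetGeneration, GC.Collect and GC.KeepAlive are the real framework calls. The exact numbers depend on the runtime and GC settings, so treat what comes back as indicative rather than guaranteed:

```csharp
using System;

// Hypothetical demo class, for illustration only
public static class PromotionDemo
{
    // Records the generation of a surviving object before and after each forced collection
    public static int[] Run()
    {
        var survivor = new object();
        var generations = new int[3];

        generations[0] = GC.GetGeneration(survivor); // freshly allocated
        GC.Collect();
        generations[1] = GC.GetGeneration(survivor); // survived one forced collection
        GC.Collect();
        generations[2] = GC.GetGeneration(survivor); // survived two forced collections

        GC.KeepAlive(survivor); // keep the reference live so it can't be collected early
        return generations;
    }
}
```

Each forced collection that the object survives can only move it up a generation, never back down, which is the whole problem.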

Monday, July 27, 2009

OneNote Screen Clipping Failing

Since we got these new PCs at work, we’ve had an issue where we couldn’t take any screen clippings with OneNote (or Alt-Print Screen, or anything actually): the resulting clipping looked like this:

Turns out someone turned the 3GB switch on (to give Visual Studio more headroom on another project[1]). And on a dual-monitor developer PC, one of the consequences is that the amount of memory the system uses to address video memory is going to be tight.

Turned it off: right as rain[2].

Note also that – as far as I can infer – Visual Studio 2008 is not, by default, marked LARGEADDRESSAWARE so wouldn’t have got the extra address space anyway without being mod’d, which strikes me as brave given the potential consequences (read the exercise in the link).

(Update: I checked, and that flag is definitely not on the exe out-of-the-box)

[1] Side note: Good article explaining the benefits from running Visual Studio on a 64 bit OS

[2] PS: Where the heck does that expression come from? Oh

[3] And there’s no LARGEADDRESSAWARE flag on the C# compiler either

Birthday Present

Whilst I was unwrapping my other presents, Microsoft RTM’d Windows 7 and Powershell 2. Just for me! You shouldn’t have, etc… Only, apparently I can’t unwrap it until Aug 6th.

But who to send the ‘thank you’ to?

Wednesday, July 08, 2009

What makes a VS Test Project?

Ever wondered what exactly makes a project a test project from the perspective of the VS IDE? Or – to put it another way – did you ever right click on a test and ‘Run Tests’ wasn’t there?

Turns out it’s just a case of this being in the primary PropertyGroup for the project:

<ProjectTypeGuids>{3AC096D0-A1C2-E12C-1390-A8335801FDAB};{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}</ProjectTypeGuids>

(that and the reference to Microsoft.VisualStudio.QualityTools.UnitTestFramework, but you had that already right?)

Friday, June 19, 2009

Stupid Naming Themes [WiX]

This is WiX:

candle.exe

dark.exe

heat.exe

light.exe

lit.exe

melt.exe

pyro.exe

smoke.exe

torch.exe

Jesus wept. Even the ‘fresh ground .jar of Java beans’ crowd outgrew this childishness…

Wednesday, June 17, 2009

Nasty SSIS 2008 issue with ‘Table or View’ access mode

I’m still not 100% sure I understand this, but here goes:

I swapped one of my packages to use a ‘table or view’ for a data source, rather than (as previously) a named query, and the performance dive-bombed. Think less ‘put the kettle on’, more ‘out for lunch and an afternoon down the pub’.

Profiler shows that when you use ‘table or view’, SSIS executes a select * from that_table_or_view on your behalf. But pasting exactly the same select * from profiler into a management studio window still ran pretty good, and completed the query in 3000-odd reads, compared to the 6 million + that SSIS had burnt before I cancelled it.

The view in question is just a straight join of two tables, fairly simple stuff. But profiler showed that SSIS was getting a very different execution plan from running the query in management studio, joining the tables in the opposite (wrong) order. This presumably explains why the number of reads went from 3000-odd to SSIS’s 6 million+. And why it was running a bit s..l..o..w.

Bizarrely even when I put the same select * back into SSIS using ‘Sql Command’ mode, it still ran quickly, provided there was a line break before the FROM. This made me think there must be a bad execution plan getting used somehow, and that extra whitespace was just enough to avoid it:

Looking closer at profiler, it appears that when using ‘Table or View’ SSIS first issues the same query with a SET ROWCOUNT 1 on, in order to get the metadata. This doesn’t happen when SSIS uses ‘Sql Command’ as its source: it executes sp_prepare and seems to get all its metadata from that.

So the only conclusion I can come to is that the execution plan is being poisoned by executing it with SET ROWCOUNT 1 on, picking a plan that’s more appropriate for one row than many. In ‘Sql Command’ mode this doesn’t appear to be an issue because SSIS gets the metadata a different way.

Which makes me think I will be avoiding ‘table or view’ like the plague from now on.

[Update 2009-06-19]

For the sake of completeness, here are the extra screenshots I wanted to put in originally, but my screen clipper was playing up (yet again).

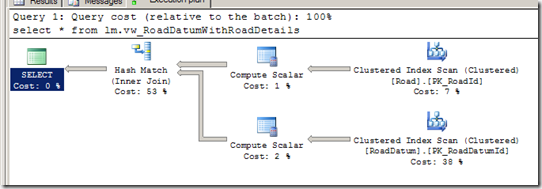

Here’s the ‘right’ version you get from management studio or SSIS in ‘SQL Command’ mode (last two columns are ‘READS’ and ‘DURATION’ respectively):

And here’s what SSIS produces in ‘table or view’ mode (sorry I clipped off the bit above showing it executing the same query with SET ROWCOUNT 1 on, but it did happen):

…a very different plan.

I thought a good proof here would be to log in as a different user (plans are cached per-user), and execute the equivalent of what SSIS produced:

set rowcount 1

select * from lm.vw_RoadDatumWithRoadDetails

go

set rowcount 0

select * from lm.vw_RoadDatumWithRoadDetails

…unfortunately that didn’t seem to work (or rather fail), so there must be something else in the SSIS preamble that’s also involved in this case :-(

However Microsoft UK’s Simon Sabin has already posted a repro based on the Adventure Works 2008 database (which was pretty quick work). He says:

“Nested loop joins don’t perform if you are processing large numbers of rows, do to the lookup nature”

…which is exactly the strategy I see in the ‘bad’ plan above.

So whose ‘fault’ is all this? You could make a good case for saying that SQL server should play safe and include the ROWCOUNT as part of its ‘does-a-query-plan-match?’ heuristic (along with a basket of other options it already includes), and that would probably be a good thing generally.

But I think the main question has got to be why does SSIS use SET ROWCOUNT at all? Any time I’ve ever wanted to get metadata I’ve always done a SELECT TOP 0 * FROM x. The ‘top’ is part of the query, so already generates a unique query plan, and it’s not like SSIS has to parse and re-write the query to insert the TOP bit : SSIS is already generating that whole query, based on the ‘table or view’ name: adding the ‘top’ clause would be trivial.

I feel a Connect suggestion coming on.

Wednesday, June 10, 2009

Preserving Output Directory Structure With Team Build 2008

The default TFS drop folder scheme is a train-wreck where all the binaries and output files from the entire solution are summarily dumped into a single folder (albeit with some special-case handling for websites). It’s just gross.

What I wanted, of course, was a series of folders by project containing only the build artefacts relevant for that project: ‘xcopy ready’ as I like to say. Pretty sensible yes? Quite a popular request if one Googles around: one wonders why the train-wreck scheme was implemented at all. But I digress.

Contrary to what you (and I) may have read, you actually have to do this using AfterBuild, not AfterCompile (which fires way too early in the build process[1]). So the canned solution is:

Put this in your TFSBuild.proj:

<PropertyGroup>

<CustomizableOutDir>true</CustomizableOutDir>

</PropertyGroup>

(this turns off the ‘train-wreck’ scheme, and goes back to each project’s output going to their bin\debug or whatever folder)

Put this in any project file that you want to capture the output from:

<Target Name="AfterBuild" Condition=" '$(IsDesktopBuild)'!='true' ">

<Message Text="Copying output files from $(OutDir) to $(TeamBuildOutDir)" />

<ItemGroup>

<FilesToCopy Include="$(OutDir)\**\*.*" />

</ItemGroup>

<Copy SourceFiles="@(FilesToCopy)" DestinationFiles="@(FilesToCopy ->'$(TeamBuildOutDir)\$(AssemblyName)\%(RecursiveDir)%(Filename)%(Extension)')" />

</Target>

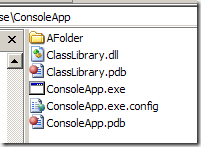

And hey presto:

A series of output folders, by assembly, containing only the artefacts relevant to that assembly, as opposed to the default single-folder dump.

Note however that with this scheme you get all files marked ‘Copy to Output Directory’ but not files marked as Content, which makes this currently unusable for deploying websites and means it’s not strictly speaking xcopy-ready[2]. Hopefully there is an easy fix to this, otherwise I’ll be diving back into the SNAK codebase where I’ve solved this previously.

[1] before serialization assemblies are generated, and before the obj folder has been copied to the bin folder. A good diagnostic is to put <Exec Command="dir" WorkingDirectory="$(OutDir)"/> into an AfterCompile target, and take a look at what you get back.

[2] I have a big thing about this, which is why I dislike the ClickOnce build output so much. Build and deploy need to be considered separately.
