Our install process is quite complex, so we use psake to wrangle it. Integration between the two is relatively straightforward (in essence we just bind `$octopusParameters` straight onto psake's `-properties`), and I could see from the stack trace that the failure was actually happening within the psake module itself. Given that the error message named the variable that caused the issue, I figured the problem had something to do with the variable name.

Most of the variable names are the same as per Octopus Deploy v1, but we do now get some extra ones, in particular the 'Octopus.Environment.MachinesInRole[role]' one. But that's not so different from the type of variables we've always got from Octopus, eg: 'Octopus.Step[0].Name', so what's different?

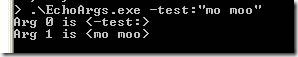

psake fails at the point where it pushes properties into variable scope for each of the tasks it executes as part of the 'build', and apparently it's choking because Test-Path doesn't like the variable name. So I put together some tests to exercise Test-Path with different variable names and find out when it squealed. They all run the same code as psake, i.e.:

```powershell
test-path "variable:\$key"
```
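The probing can be reproduced with a loop like the one below. It's a sketch: it runs the same `Test-Path` call for each name and records either `Ok` or the error message. The `$keys`/`$results` names and the output shape are my own choices, and the exact results may vary between PowerShell versions.

```powershell
# Names from the table below
$keys = 'a', 'a.b', 'a-b', 'b.a', 'b-a', 'a.b.c', 'a-b-c', 'c.b.a', 'c-b-a',
        'a[a]', 'a[a.b]', 'a[a-b]', 'a[b.a]', 'a[b-a]',
        'a[a.b.c]', 'a[a-b-c]', 'a[c.b.a]', 'a[c-b-b]', 'a[b-b-a]'

$results = foreach ($key in $keys) {
    try {
        # Same call psake makes; -ErrorAction Stop turns any error into an exception
        $null = Test-Path "variable:\$key" -ErrorAction Stop
        $result = 'Ok'
    }
    catch {
        $result = $_.Exception.Message
    }
    [pscustomobject]@{ Key = $key; Result = $result }
}

$results | Format-Table -AutoSize
```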

| `$key` | Result |
|---|---|
| `a` | Ok |
| `a.b` | Ok |
| `a-b` | Ok |
| `b.a` | Ok |
| `b-a` | Ok |
| `a.b.c` | Ok |
| `a-b-c` | Ok |
| `c.b.a` | Ok |
| `c-b-a` | Ok |
| `a[a]` | Ok |
| `a[a.b]` | Ok |
| `a[a-b]` | Ok |
| `a[b.a]` | Ok |
| **`a[b-a]`** | **Cannot retrieve the dynamic parameters for the cmdlet. The specified wildcard pattern is not valid: `a[b-a]`** |
| `a[a.b.c]` | Ok |
| `a[a-b-c]` | Ok |
| `a[c.b.a]` | Ok |
| **`a[c-b-b]`** | **Cannot retrieve the dynamic parameters for the cmdlet. The specified wildcard pattern is not valid: `a[c-b-b]`** |
| `a[b-b-a]` | Ok |

I've highlighted the failure cases, but what's just as interesting is which cases pass. This gives a clue as to the underlying implementation, and why the failure happens.

To cut a long story short, it appears that any time you use square brackets in your variable name, PowerShell uses wildcard matching to parse the content within the brackets. If that content contains a hyphen, the characters immediately before and after the first hyphen are treated as a range for matching, and if the end of that range comes before its start (i.e. is alphabetically earlier), you get an error.
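To make the inferred rule concrete, here's a small sketch that predicts, from the name alone, whether a key will trip it. It only encodes the rule as described above (first bracketed group, first hyphen, compare the characters either side by code point); it is not PowerShell's actual wildcard parser, and `Test-ReversedWildcardRange` is a made-up name.

```powershell
# Hypothetical helper encoding the rule inferred from the table;
# it does NOT call PowerShell's wildcard engine.
function Test-ReversedWildcardRange {
    param([Parameter(Mandatory = $true)][string]$Name)

    # Grab the contents of the first [...] group, if there is one
    $match = [regex]::Match($Name, '\[([^\]]*)\]')
    if (-not $match.Success) { return $false }

    $inner = $match.Groups[1].Value
    $dash = $inner.IndexOf([char]'-')

    # No hyphen, or a hyphen with nothing on one side, means no range
    if ($dash -le 0 -or $dash -ge $inner.Length - 1) { return $false }

    # The range is "backwards" when its end sorts before its start
    return [int]$inner[$dash - 1] -gt [int]$inner[$dash + 1]
}

'a[a-b]', 'a[b-a]', 'a[c-b-b]', 'a[b-b-a]', 'a-b' |
    ForEach-Object { '{0,-9} {1}' -f $_, (Test-ReversedWildcardRange $_) }
```

Run against every row of the table, it flags exactly the two failing names and none of the others.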

Nasty.

It's hard to know who to blame here. Octopus makes those variables based on what roles you define in your environment, though I'd argue the square brackets are potentially a bad choice. I'd say psake is partly culpable too, though you'd be forgiven for thinking that what they were doing was just fine, and there's no obvious way of suppressing the wildcard detection. Ultimately I think this is a PowerShell bug. Whichever way you look at it, the chances of me getting it fixed soon are fairly slight.

In this case I can just change all my Octopus role names to use dots rather than hyphens, and I think I'll be out of the woods, but otherwise this could be a right royal mess to fix. I'd probably have to forcefully remove variables from scope just to keep psake happy, which would be ugly.
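Rather than removing variables from scope afterwards, another option (an untested sketch, not something psake or Octopus provides) is to filter the Octopus variables before they ever reach psake's `-properties`, dropping any key that makes the same Test-Path probe throw. `Get-PsakeSafeProperties` and `deploy.ps1` are hypothetical names. The trade-off is that the dropped variables silently vanish from the build, so anything that depends on them needs another route in.

```powershell
# Hypothetical helper (not part of psake or Octopus): copy deployment variables,
# skipping any key that makes the Test-Path probe throw.
function Get-PsakeSafeProperties {
    param([Parameter(Mandatory = $true)][System.Collections.IDictionary]$Variables)

    $safe = @{}
    foreach ($key in @($Variables.Keys)) {
        try {
            # Same probe psake runs against each property name
            $null = Test-Path "variable:\$key" -ErrorAction Stop
            $safe[$key] = $Variables[$key]
        }
        catch {
            Write-Warning "Skipping '$key': $($_.Exception.Message)"
        }
    }
    return $safe
}

# Usage (deploy.ps1 is a placeholder for your psake script):
# Invoke-psake .\deploy.ps1 -properties (Get-PsakeSafeProperties $octopusParameters)
```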

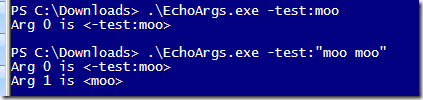

Interestingly, the documentation for Test-Path is a bit confused as to whether wildcards are allowed here: the description says they are, but 'Accept wildcard characters?' claims otherwise:

```
PS C:\> help Test-Path -parameter:Path

-Path
    Specifies a path to be tested. Wildcards are permitted. If the path includes spaces, enclose it in quotation marks. The parameter name ("Path") is optional.

    Required?                    true
    Position?                    1
    Default value
    Accept pipeline input?       True (ByValue, ByPropertyName)
    Accept wildcard characters?  false
```

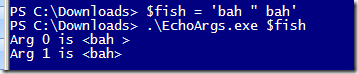

Also interesting is that Set-Variable suffers from the same issue, for exactly the same cases (and wildcarding definitely doesn't make any sense there). Which means you can do this:

```powershell
${a[b-a]} = 1
```

but not this:

```powershell
Set-Variable 'a[b-a]' 1
```

Go figure.

Update 23/7:

- You can work around this by escaping the square brackets with backticks, e.g. ``Set-Variable 'a`[b-a`]'``

- I raised a Connect issue for this, because I think this is a bug.

- I've raised an issue with psake on this, because I think they should go the escaping route to work around it.
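Going the escaping route, a psake-style existence check could escape the key before handing it to Test-Path. This is a sketch, not psake's actual code: it assumes `[System.Management.Automation.WildcardPattern]::Escape`, which backtick-escapes wildcard characters such as `[` and `]`, and `Test-VariableExists` is a hypothetical name.

```powershell
# Hypothetical helper: the psake-style existence check, but with the key
# escaped so the variable provider treats it as a literal name.
function Test-VariableExists {
    param([Parameter(Mandatory = $true)][string]$Name)

    # 'a[b-a]' becomes 'a`[b-a`]', so [b-a] is no longer read as a range
    $escaped = [System.Management.Automation.WildcardPattern]::Escape($Name)
    return Test-Path "variable:\$escaped"
}

Test-VariableExists 'Octopus.Environment.MachinesInRole[web-app]'
```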