This is WiX:

candle.exe

dark.exe

heat.exe

light.exe

lit.exe

melt.exe

pyro.exe

smoke.exe

torch.exe

Jesus wept. Even the ‘fresh ground .jar of Java beans’ crowd outgrew this childishness…

Software, Data and Analytics

This is WiX:

candle.exe

dark.exe

heat.exe

light.exe

lit.exe

melt.exe

pyro.exe

smoke.exe

torch.exe

Jesus wept. Even the ‘fresh ground .jar of Java beans’ crowd outgrew this childishness…

I’m still not 100% sure I understand this, but here goes:

I swapped one of my packages to use a ‘table or view’ for a data source, rather than (as previously) a named query, and the performance dive-bombed. Think less ‘put the kettle on’, more ‘out for lunch and an afternoon down the pub’.

Profiler shows that when you use ‘table or view’, SSIS executes a select * from that_table_or_view on your behalf. But pasting exactly the same select * from profiler into a management studio window still ran pretty good, and completed the query in 3000-odd reads, compared to the 6 million + that SSIS had burnt before I cancelled it.

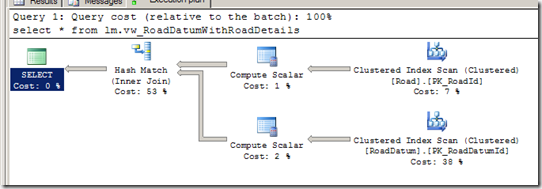

The view in question is just a straight join of two tables, fairly simple stuff. But profiler showed that SSIS was getting a very different execution plan from running the query in management studio, joining the tables in the opposite (wrong) order. This presumably explains why the number of reads went from 3000-odd to SSIS’s 6 million+. And why it was running a bit s..l..o..w.

Bizarrely even when I put the same select * back into SSIS using ‘Sql Command’ mode, it still ran quickly, provided there was a line break before the FROM. This made me think there must be a bad execution plan getting used somehow, and that extra whitespace was just enough to avoid it:

Looking closer at profiler, it appear that when using ‘Table or View’ SSIS first issues the same query with a SET ROWCOUNT 1 on, in order to get the metadata. This doesn’t happen when SSIS uses ‘Sql Command’ as its source: it executes sp_prepare and seems to get all its metadata from that.

So the only conclusion I can come to is that the execution plan is being poisoned by executing it with SET ROWCOUNT 1 on, picking a plan that’s more appropriate for one row than many. In ‘Sql Command’ mode this doesn’t appear to be an issue because SSIS gets the metadata a different way.

Which makes me think I will be avoiding ‘table or view’ like the plague from now on.

[Update 2009-06-19]

For the sake of completeness, here are the extra screenshots I wanted to put in originally, but my screen clipper was playing up (yet again).

Here’s the ‘right’ version you get from management studio or SSIS in ‘SQL Command’ mode (last two columns are ‘READS’ and ‘DURATION’ respectively):

And here’s what SSIS produces in ‘table or query mode’ (sorry I clipped off the bit above showing it executing the same query with SET ROWCOUNT 1 on, but it did happen):

…a very different plan.

I thought a good proof here would be to log in as a different user (plans are cached per-user), and execute the equivalent of what SSIS produced:

set rowcount 1

select * from lm.vw_RoadDatumWithRoadDetails

go

set rowcount 0

select * from lm.vw_RoadDatumWithRoadDetails

…unfortunately that didn’t seem to work (or rather fail), so there must be something else in the SSIS preamble that’s also involved in this case :-(

However Microsoft UK’s Simon Sabin has already posted a repro based on the Adventure Works 2008 database (which was pretty quick work). He says:

“Nested loop joins don’t perform if you are processing large numbers of rows, do to the lookup nature”

…which is exactly the strategy I see in the ‘bad’ plan above.

So who’s ‘fault’ is all this? You could make a good case for saying that SQL server should play safe and include the ROWCOUNT as part of its ‘does-a-query-plan-match?’ heuristic (along with a basket of other options it already includes), and that would probably be a good thing generally.

But I think the main question has got to be why does SSIS use SET ROWCOUNT at all? Any time I’ve ever wanted to get metadata I’ve always done a SELECT TOP 0 * FROM x. The ‘top’ is part of the query, so already generates a unique query plan, and it’s not like SSIS has to parse and re-write the query to insert the TOP bit : SSIS is already generating that whole query, based on the ‘table or view’ name: adding the ‘top’ clause would be trivial.

I feel a Connect suggestion coming on.

The default TFS drop folder scheme is a train-wreck where all the binaries and output files from the entire solution are summarily dumped into a single folder (albeit with some special-case handling for websites). Its just gross.

What I wanted, of course, was a series of folders by project containing only the build artefacts relevant for that project: ‘xcopy ready’ as I like to say. Pretty sensible yes? Quite a popular request if one Googles around: one wonders why the train-wreck scheme was implemented at all. But I digress.

Contrary to what you (and I) may have read, you actually have to do this using AfterBuild, not AfterCompile (which fires way too early in the build process[1]). So the canned solution is:

Put this in your TFSBuild.proj:

<PropertyGroup>

<CustomizableOutDir>true</CustomizableOutDir>

<!-- TEAM PROJECT

(this turns off the ‘train-wreck’ scheme, and goes back to each project’s output going to their bin\debug or whatever folder)

Put this in any project file that you want to capture the output from:

<Target Name="AfterBuild" Condition=" '$(IsDesktopBuild)'!='true' ">

<Message Text="Copying output files from $(OutDir) to $(TeamBuildOutDir)" />

<ItemGroup>

<FilesToCopy Include="$(OutDir)\**\*.*" />

</ItemGroup>

<Copy SourceFiles="@(FilesToCopy)" DestinationFiles="@(FilesToCopy ->'$(TeamBuildOutDir)\$(AssemblyName)\%(RecursiveDir)%(Filename)%(Extension)')" />

</Target>

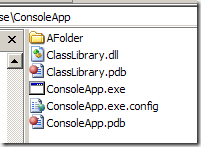

And hey presto:

A series of output folders, by assembly, containing only the artefacts relevant to that assembly, as opposed to the

Note however that with this scheme you get all files marked ‘Copy to Output Directory’ but not files marked as Content, which makes this currently unusable for deploying websites and means it’s not strictly speaking xcopy-ready[2]. Hopefully there is an easy fix to this, otherwise I’ll be diving back into the SNAK codebase where I’ve solved this previously.

[1] before serialization assemblies are generated, and before the obj folder has been copied to the bin folder. A good diagnostic is to put <Exec Command="dir" WorkingDirectory="$(OutDir)"/> into an AfterCompile target, and take a look at what you get back.

[2] I have a big thing about this, which is why I dislike the ClickOnce build output so much. Build and deploy need to be considered separately.

Here at work we have our profiles, including our documents folders, remoted to the network. It’s a constant pain, and this morning the fun was how to get the CopySourceAsHTML visual studio Add-in working as a result, since its ‘normal’ install location, My Documents\Visual Studio 2008\AddIn’ is within that remoted profile.

Irrespective of fiddling around with CASPOL and security zones, Visual Studio seems intransigent in not wanting to load AddIns from network locations. Fortunately it turns out there are a number of different places that you can put add-ins to get them to load, which is probably better explained in this conversation.

As a result I dumped the addin into

%ALLUSERSPROFILE%\Application Data\Microsoft\VisualStudio\9.0\AddIns

C:\Documents and Settings\All Users\Application Data\Microsoft\VisualStudio\9.0\AddIns

…and it worked just fine.