So finally some time last week HP shipped to web the final 64bit NTrig drivers for my TX2, and I now have a working Windows 7 Multitouch device. Sure, candidate drivers have been available from the NTrig site for ages, but I had issues with ghost touches, so they got uninstalled within a day or so as the page I was browsing kept scrolling off…

Something still bugs me, which is that if the final drivers only went to web last week, how come they’re already selling the TX2 with Windows 7 in Harvey Norman? The 32-bit driver’s still not there for download today (1/11/09). For HP to be putting drivers on shipping PCs prior to releasing them to existing owners seems bizarre, if not downright insulting.

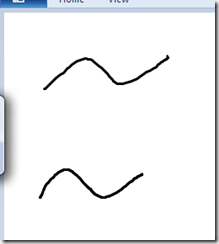

But I digress. Check this out:

Ok, it’s not my best work. But the litmus test of working multitouch is, amazingly, Windows Paint. If you can do this with two fingers, you are off and running (fingers not shown in screenshot – sorry).

So finally I can play touch properly, Win 7 style. And it rocks.

I am, for example, loving the inertia-compliant scrolling support in IE, which totally refutes my long-held belief that a physical scroll wheel is required on tablets, and makes browsing through long documents a joy. It alone would justify the Win 7 upgrade cost for a touch-tablet user. It’s not all plain sailing: I’m sure Google Earth used to respond to ‘pinch zoom’ under the NTrig Vista drivers (which handled the gestures themselves, so presumably sent the app a ‘zoom’ message like the one your keyboard’s zoom slider would produce), but it doesn’t in Win 7, at least until they implement the proper handling. But in most cases ‘things just work’ thanks to default handling of unhandled touch-related Windows messages (e.g. an unhandled touch-pan gesture message will cause a scroll message to get posted, which is more likely to be supported).

But how to play with this yourself, using .Net?

In terms of hardware we are in early adopter land big time. The HP TX2 is half the price of the Dell XT2, and there’s that HP desktop too. Much more interesting is Wacom entering the fray with multi-touch on their new range of Bamboo tablets, including the Bamboo Fun. This is huge: at that price point ($200 USD) Wacom could easily account for the single largest touch demographic for the next few years, and ‘touching something’ has some distinct advantages over ‘touching the screen’: you can keep your huge fancy monitor, use touch at a bigger distance and avoid putting big smudges on whatever you’re looking at. (If anyone made a cheap input tablet that was also a low-rez display/SideShow device you’d get the best of both worlds of course). Finally, there is at least one project in beta to use two mice instead of a multitouch input device, which is (I believe) something that the Surface SDK already provides.

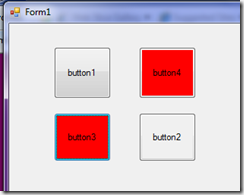

SDK-wise, unfortunately the Windows API Code Pack doesn’t help here, so we are off into Win32-land. And whilst there are some good explanatory articles around, they’re mostly C++, some are outdated from early Win 7 builds, and some are just plain incorrect (wrong interop signature in one case, which – from experience – is a real nasty to get caught by, since it might not crash in Debug builds). The best bet seems to be the fairly decent MSDN documentation, and particularly the samples in the Windows 7 SDK, which – if nothing else – are a good place to copy the interop signatures / structures from (since they’re not up on the P/Invoke Wiki yet). But just to get something very basic up and running doesn’t take that long.

Again, without the fingers it’s a bit underwhelming, but what’s going on here is that those buttons light up when there’s a touch input detected over them, and I have two fingers on the screen. Here’s the guts:

    protected override void WndProc(ref Message m)
    {
        // Reset any control that hasn't been touched in the last 500 ms.
        foreach (var touch in _lastTouch)
        {
            if (DateTime.Now - touch.Value > TimeSpan.FromMilliseconds(500))
                touch.Key.BackColor = DefaultBackColor;
        }

        var handled = false;
        switch (m.Msg)
        {
            case NativeMethods.WM_TOUCH:
                {
                    // The low word of wParam is the number of touch points in this message.
                    var inputCount = m.WParam.ToInt32() & 0xffff;
                    var inputs = new NativeMethods.TOUCHINPUT[inputCount];
                    if (!NativeMethods.GetTouchInputInfo(m.LParam, inputCount, inputs, NativeMethods.TouchInputSize))
                    {
                        handled = false;
                    }
                    else
                    {
                        foreach (var input in inputs)
                        {
                            //Trace.WriteLine(string.Format("{0},{1} ({2},{3})", input.x, input.y, input.cxContact, input.cyContact));
                            // Touch coordinates arrive in hundredths of a pixel, in screen space.
                            var correctedPoint = this.PointToClient(new Point(input.x / 100, input.y / 100));
                            var control = GetChildAtPoint(correctedPoint);
                            if (control != null)
                            {
                                control.BackColor = Color.Red;
                                _lastTouch[control] = DateTime.Now;
                            }
                        }
                        handled = true;
                    }
                    richTextBox1.Text = inputCount.ToString();
                }
                break;
        }

        // Let the default handling run as well.
        base.WndProc(ref m);

        if (handled)
        {
            // Acknowledge event if handled.
            m.Result = new System.IntPtr(1);
        }
    }
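The handler above leans on a `NativeMethods` class and a `_lastTouch` field that aren't shown. What follows is a minimal sketch of the interop side, assuming the declarations follow the Win32 `TOUCHINPUT` / `GetTouchInputInfo` definitions documented on MSDN. The class layout and the `TouchInputSize` helper are my guess at what the original looked like, not a copy of it. `_lastTouch` is presumably a `Dictionary<Control, DateTime>`, going by how it is used.

```csharp
using System;
using System.Runtime.InteropServices;

// Sketch only: member names mirror the Win32 headers; the class itself is an assumption.
internal static class NativeMethods
{
    // Sent to a registered window when one or more touch points change.
    public const int WM_TOUCH = 0x0240;

    // Managed mirror of the Win32 TOUCHINPUT structure.
    // x and y are in hundredths of a pixel, in screen coordinates.
    [StructLayout(LayoutKind.Sequential)]
    public struct TOUCHINPUT
    {
        public int x;
        public int y;
        public IntPtr hSource;
        public int dwID;
        public int dwFlags;
        public int dwMask;
        public int dwTime;
        public IntPtr dwExtraInfo;
        public int cxContact;
        public int cyContact;
    }

    // Size passed as cbSize to GetTouchInputInfo.
    public static readonly int TouchInputSize = Marshal.SizeOf(typeof(TOUCHINPUT));

    // A window must opt in with this call before it receives WM_TOUCH.
    [DllImport("user32.dll", SetLastError = true)]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool RegisterTouchWindow(IntPtr hWnd, uint ulFlags);

    // Fills pInputs with the touch points behind the handle passed in lParam.
    [DllImport("user32.dll", SetLastError = true)]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool GetTouchInputInfo(IntPtr hTouchInput, int cInputs,
        [In, Out] TOUCHINPUT[] pInputs, int cbSize);

    // Releases the touch input handle if the message is not passed on to default handling.
    [DllImport("user32.dll", SetLastError = true)]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool CloseTouchInputHandle(IntPtr hTouchInput);
}
```

One thing the handler doesn't show is registration: a window only gets `WM_TOUCH` once it has called `RegisterTouchWindow`, so something like `NativeMethods.RegisterTouchWindow(Handle, 0)` in an `OnHandleCreated` override is presumably sitting elsewhere in the form.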

Now I’ll have to have a look at inertia, and WPF. I hear there’s native support for touch in WPF 4, so there’s presumably some managed-API goodness in .Net 4 for all this too, not just through the WPF layer. Which is just as well, because getting it working under WPF 3.5 looks a bit hairy (no WndProc to override, which is a bad start…)
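For comparison, here is a rough sketch of what the WPF 4 route looks like, assuming the touch events that .Net 4 adds to `UIElement` (`TouchDown`, `TouchMove`, `TouchUp`). The `TouchWindow` class name is made up for illustration, and this is not a port of the button demo above, just the smallest thing that reports a touch.

```csharp
using System.Windows;
using System.Windows.Input;

// Hypothetical window that shows the most recent touch contact in its title bar.
public class TouchWindow : Window
{
    public TouchWindow()
    {
        // WPF 4 raises touch events directly, so no WndProc or interop is needed.
        TouchDown += OnTouchDown;
    }

    private void OnTouchDown(object sender, TouchEventArgs e)
    {
        // Position is reported relative to the element passed in (here, the window).
        TouchPoint point = e.GetTouchPoint(this);
        Title = string.Format("Touch {0} at {1:0},{2:0}",
            e.TouchDevice.Id, point.Position.X, point.Position.Y);
    }
}
```

Each finger gets its own `TouchDevice.Id`, which is the managed counterpart of the `dwID` field in `TOUCHINPUT`.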

1 comment:

Hi, just thought I'd post that Wacom's multitouch support is not true multitouch. Their drivers, for instance, do not fire WM_TOUCH messages at all. Instead their driver simulates keystrokes for the various situations it can handle. I was very disappointed when I got one of these for Christmas. I should have done my homework better regarding the device.
