Merging in updated or newly created data from an external source is a pretty common task in SSIS. The canonical way[1] these upserts are handled is the SSIS Lookup Pattern:

Incoming rows are run through the Lookup component to identify which already exist in the destination. Those that do become UPDATEs, and those that don’t (the Lookup’s error output) become INSERTs. You can further refine this to only retrieve the inserted-or-updated rows from the datasource in the first place and save on bandwidth and so on (a future post on delta loads I think).

It’s fairly straightforward, but to my mind this has a few major problems.

Firstly, it’s a pain in the arse. For something that’s such a common requirement, this seems like a lot of shape-twiddling-clicky-click-dragging to get it up and running[1]. And I hate how brittle the OLE DB Command parameter mapping is. But that’s another story.

More concerning is that the update flow uses the OLE DB Command component, and that’s something that fires per row, unlike the OLE DB Destination that your INSERTs go to, which can batch and/or use fast load. Normally the updates are going to be in the minority compared to the inserts, but if you get a significant number of them it still costs time.

Probably the bigger problem for me with this pattern is that the performance of the lookup degrades as the data volume progressively increases. Every time you insert data there's more for the lookup to subsequently lookup against. You can (and should) limit the columns in the lookup to restrict it's memory blow out, but it's always going to just progressively get worse over time.

To me this is symptomatic that the problem is being solved at the wrong tier. Why bring large quantities of data up from the database to determine 'newness' of a row, when that's clearly something best done closer to the database itself? Especially as both sets of rows are going to the same place anyway.

So then you think about pouring data into a temporary table, then doing the upsert within Sql (based on a join), then dropping the temporary table. But you’ve got to create and drop the temporary table. What you need is a temporary table that’s transparently created for you. And what, after all, is an INSTEAD OF trigger, if not precisely that?

The Upsert View

So my solution for this is a series of 'upsert views'. An upsert view is a view with an INSTEAD OF INSERT trigger that instead of the insert performs an upsert. In this case (being Sql 2005) the upsert is an IF … INSERT … ELSE … UPDATE construct, but a 'true' Sql 2008 upsert would be a minor tweak.

An upsert view looks a bit like this:

Select all-the-updatable-columns

From sometable

Non-updatable columns include timestamp and identity columns (you'd never mark a column you brought in from a source system as an identity column, so there's no need to mess about with identity inserts).

The instead of trigger looks a bit like this:

-- Insert all the genuinely new rows

Insert into [table]

(all-the-updatable-columns)

From

Select (all-the-updatable-columns)

From inserted

Where not exists (select 1 from [table] where (keys=inserted.keys)

-- Update all the modified rows

Update [table]

Set all-the-updatable-columns=inserted.all-the-updatable-columns

From

Inserted

Inner join [table] on (keys = inserted.keys)

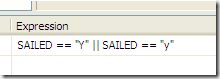

Where (concurrency_indicators <> inserted.concurrency_indicators)

(We’ll explain the keys and the concurrency indicators in a moment)

What this produces is a view over your data table that takes records INSERTED into the view and performs an UPSERT into the base table. As a result your SSIS can be simplified to this:

In reality of course you normally have more than this: probably have some other lookups and some data type conversions. However you've not had to put the Upsert functionality into the SSIS (by hand): instead the database is handling it all for you.

Provided you enable the FIRE_TRIGGERS option, this is entirely compatible with using Fast Load, and this can make a significant performance difference for the time of your package execution. You've kept all the row-matching down in Sql where it can use indexes, optimisations and the page cache, and you've reduced the memory footprint of your SSIS packages. I shame myself by not having any hard comparison data to back this up however.

We (of course) generate[2] these upsert views based on various bits of metadata we store to indicate which are the keys and the concurrency indicators. In a data warehouse it’s quite common for the primary key to be different to that defined in the source system, so the key used for row matching – the ‘business key’ – has to be indicated somewhere. Column-level extended properties seemed a fairly natural fit:

Similarly the columns that are used for concurrency control – rowversion / datetime updated – are marked as follows:

(If you don’t have one then you use the ‘fallback’ concurrency model that so many of Microsoft’s design-time tools like to kick out: you compare every column to see if it’s changed (mark all non-key columns). This is clearly a bit yuck).

And that’s pretty much it. Provided you can cope with INSERTing into a view and getting UPDATEs to your table, (which is a bit odd, but can be catered for with appropriate naming conventions) then maybe you can benefit from this approach too.

[1] Sometimes you can get the Slowly Changing Dimension wizard to kick out something similar, but that’s pretty hacky, and doesn’t like using certain types of columns as the update indicator (IRRC). It also re-creates the downstream flow every time you open it, which obviously removes any customisations you make. Avoid.

[2] The generation process was a fairly simple PowerShell script. I’ll post the important bits up one day.